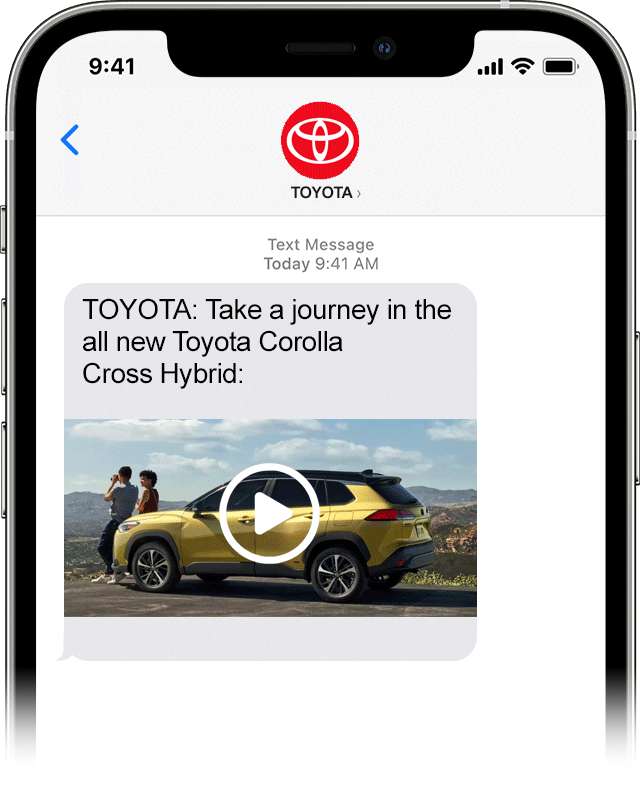

This week, it was announced that Apple is adopting RCS (Rich Communication Services) as a standard feature on its next iOS version. RCS is aptly named, as it enables richer forms of communication to be sent over the texting network. (Textwork? Nextext? Just spitballing.) Now, instead of just text in a blue (sometimes green) bubble, the occasional animated GIF from friends, or a bit.ly link from brands, users can share images, videos, and even interactive features.

Obviously, this news is rife with marketing and business implications. A survey from Juniper Research, a UK-based telecom research firm, found that “business messaging traffic will grow from $1.3 billion to $8 billion in 2025.” Wowzers. That’s a lot. And soon. No small part of that growth will come from Apple’s 900 million devices entering the fray.
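For anyone who wants to check the size of that jump, here is a quick back-of-the-envelope sketch using only the two dollar figures in the Juniper quote. The variable names are mine, and because the quote doesn't say which year the $1.3 billion figure belongs to, this computes only the overall multiple, not a yearly growth rate.

```python
# Figures taken from the Juniper Research quote above, in billions of US dollars.
# The starting year is not stated in the quote, so no annual rate is computed.
start_billion = 1.3
end_billion = 8.0

# Overall growth expressed as a multiple and as a percentage increase.
growth_multiple = end_billion / start_billion
percent_increase = (growth_multiple - 1) * 100

print(f"Growth multiple: {growth_multiple:.1f}x")
print(f"Percent increase: {percent_increase:.0f}%")
```

Taken at face value, the two endpoints work out to a bit more than a sixfold increase.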

RCS is also notable for supporting end-to-end encryption so that messages can’t be intercepted. Apple, which has been prickly about privacy, especially as it relates to marketing via mobile devices, probably saw this as an opportunity to deliver more robust services to iPhone users while toeing the line of its newer, harsher security stance.

As it relates to marketing, the possibilities seem both endless and exciting. Richer media often holds the door open for more robust and interactive engagements. Surveys. Games. Direct app downloads. Oh my!

So, could this be a kind of renaissance moment for the oft-maligned outpost known as direct marketing? Methinks perhaps. Instead of just offering the standard “reply STOP to opt out” or “1 to reply YES” options, recipients of RCS messages can now explore the brands’ text-messaged offerings in private, low-risk interactions and choose (if the brands do this correctly) among a number of engagement pathways.

So everybody wins: brands get to design and deliver more interesting and more entertaining features directly to consumers to increase engagement and drive whatever metrics they’re chasing. Consumers get to engage with cooler marketing tactics while still feeling in control of the conversation (remember, you can opt out or just delete anytime you like). Heck, direct marketing wins by getting a slick, new, digital shot in the arm.

But the real winners? It’s the carriers.

That’s right. AT&T, Verizon, T-Mobile. At least in the United States, they stand to gain the most from this boon, since they’ll be double-dipping their way to some of that roughly sixfold growth predicted by Juniper in its report.

For Dip 1, it will cost brands more to send these richer engagements across the texting network (Textnet? TheNextwork? Still working on ideas.) through the various third-party mass texting platforms that enable them, because the platforms’ per-message rates will go up to cover the increased carrier fees. Hmmm.

And for Dip 2, carriers will quietly pass additional fees on to consumers on their monthly bills. That old “text and data fees may apply” disclaimer is going to cost a titch more than it used to as you opt in to more of these newer, brighter, more colorful and more animated engagements. The fees will be nominal to each consumer, but across these networks of hundreds of millions of subscribers, it will amount to some delicious over-the-transom revenue from both sides of the marketing equation. And with no additional infrastructure costs.

Well done, you sneaky little bastards.